Wi-Fi Linux Server in a Lightbulb? Is the Internet-of-Things getting ridiculous?

I just recently moved from a house in a suburban neighborhood to an apartment block in a more built-up area. One thing that has driven me crazy about this is that Wi-Fi is basically no longer usable. There are about 40 near-full-strength Wi-Fi networks in range now, where before I could see only my own and my closest neighbor’s (and that one was very weak). Some of my new neighbors’ access points are set up for wide-band, so they hog two channels. From scanning the area with NetSpot, it looks like some of the neighbors know enough to disable wide-band and maybe move to a non-default channel, but most are on default settings. In my case even moving to a non-default channel doesn’t improve the situation much. I have both 2.4GHz and 5GHz band access points, but there are many other networks in range that cover both these bands.

Basically, unless I build a Faraday cage inside my apartment, I’m out-of-luck. I’ve had to run Ethernet to everything that has a physical port. Even my wireless/Bluetooth mice don’t work well in this soup of competing radios.

And then this week I saw this:

It looks like it’s a Raspberry Pi inside a lightbulb, a full Wi-Fi enabled Linux server, which seems excessive. I hope the intended use case for these is large commercial property and not residential…

Xamarin Forms in Visual Studio 2017

I recently had a chance to revisit the latest state of cross-platform mobile development in Visual Studio. I haven’t been able to spend as much time in this area as I’d have liked. The prospect of being able to architect a single application that can run under three different UI platforms (iOS, Android and UWP) is very compelling.

I have in the past built out applications, based on the Model-View-ViewModel separated presentation pattern, that were able to push about 75% of the code burden into shared models and view models that could drive separate WPF, Silverlight and (to a more limited extent) ASP.NET MVC UI implementations. Unfortunately, at that time Silverlight was already being regarded as a dead man walking, even for internal line-of-business apps. User interfaces are by far the least stable aspects of any application architecture, and UI technologies seem to evolve rapidly, so an architecture that can keep the UI layer as thin as possible is valuable for keeping ongoing maintenance costs in check.

Xamarin Primer

Xamarin was formed by the original creators of Mono (a cross-platform .NET CLR), and was acquired last year by Microsoft, which then quickly offered the Xamarin bits as a part of Visual Studio. There are two versions of Xamarin “Native” (for iOS and Android), which allow for native apps on those respective platforms to be developed in C#. There is also Xamarin Forms, which is essentially a subset of UI components that can be used to build an application that can be deployed to both iOS and Android, in addition to Windows UWP “Store” apps (also on the Mac, but I’m not planning to try that). The apps are still native to each respective platform – this is not a “common-look-and-feel” proposition like you would have had back in the Java Swing/AWT days.

The Initial Xamarin Experience in Visual Studio 2017

Coming from a WPF background, I’m very familiar with XAML and MVVM, so I expected that Xamarin Forms app development would be fairly straightforward. For me there are some early annoyances and rough edges:

- The ARM version of the Android emulator is painfully slow to start, deploy and run applications.

- The x86 version of the Android emulator is faster (supposedly 10x faster - that’s not what I observed), but requires Hyper-V to be disabled and the machine rebooted, which is unfortunate because I have several other active projects that use Docker, and that requires Hyper-V to be enabled. If I’m going to be doing a lot of Xamarin work, I’m going to be rebooting a lot. I guess developers that are working on an app that uses a Docker-deployed back-end to a Xamarin Forms front end are going to need two development machines.

- The iOS emulator won’t run at all unless you own a Mac and can connect to it on your local network. This is obviously not a big deal if you’re profiting from your application or have an employer or client that can foot the bill for an expensive spare device, but I’m not going to pay the Apple premium to experiment with Xamarin apps, so I concentrate mobile testing on Android.

- After starting the Android emulator manually up-front, the empty sample project worked just fine, which was encouraging. Unfortunately, for some reason, after I closed the emulator and Visual Studio and came back to the project later on, it wouldn’t deploy to the warmed-up emulator: “… an error occurred. see full exception on logs for more details. Object reference not set to an instance of an object”. After uninstalling and reinstalling the Mobile development components in the Visual Studio installer, this went away and I was able to consistently run the app. No idea what happened there.

- I hate to see warnings in the build output, and the empty Xamarin Forms project, out-of-the-box, shows several, which is sloppy QA. The first was complaining about a System.ObjectModel reference in the Android native project (it didn’t seem to matter when I removed the reference, so that’s probably an oversight of the project template). There were also several other warnings about the version of the Android SDK: “The $(TargetFrameworkVersion) for Xamarin.Forms.Platform.Android.dll (v7.1) is greater than the $(TargetFrameworkVersion) for your project (v6.0). You need to increase the $(TargetFrameworkVersion) for your project”. This is weird, because you’d think that the template would match the SDK references to the default version of Android targeted (6.0). Since I hate to see warnings in build output (did I mention that I hate to see warnings in build output?), I thought I’d take a stab at fixing those. I used the Android SDK Manager to install the 7.1 bits and then set the target Android framework to 7. Built OK, no warnings. Happy. Unfortunately it failed to deploy to the emulator, because aapt.exe crashed hard with no error messages. In hindsight, since the emulator is Android 6.0, I guess it should have been obvious to me that this wouldn’t work, but the tooling didn’t really help a novice out. I took a quick look around online for a 7.x version of the emulator for Visual Studio but wasn’t able to find one. I guess I’ll have to hold my nose and live with the warnings.

- If you specify a DataType for a DataTemplate (so that you can eliminate ReSharper warnings on bound properties), the template doesn’t seem to render anything. No errors or warnings in the logs – your page simply stays blank. You have to leave your DataTemplate types open and ignore the squigglies. I hate squigglies.

I’m now looking at converting the PCL that represents the core Xamarin app logic into a .NET Standard library instead. This allows for a larger target surface area of the .NET API and simplifies the project files, but requires some manual work to convert (again I don’t see any tooling support for this yet in Visual Studio). Stay tuned for how that goes…

Docker Support in Visual Studio 2017

Having experimented with Docker on Windows about a year ago (on a Technical Preview of Windows Server 2016), I thought I’d take a quick look at how container support has evolved with the latest version of the operating system and dev tools. At the time the integration of Docker into Windows was fairly flaky (to be expected for such a bleeding edge technology), but I was able to get a relatively large Windows-dependent monolith to run inside a set of Windows Containers. Most of this involved crafting Dockerfiles and running docker commands manually (and eventually scripting these commands to automate the build process).

Docker tools are now included in Visual Studio, so you can enable Docker support in a new (web) project when you create it:

Alternatively, you can add Docker support after the fact from the project context menu:

Either way, you get the following:

- a default Dockerfile in your project. This is for packaging a container image for your service automatically.

- a default docker-compose.yml file in your solution. This is for orchestrating the topology and startup of all of the services in your solution.

- a docker-compose.ci.build.yml file in your solution. You can actually spin up a “build” container in order to run the dotnet commands to restore Nuget packages, build and publish (stage) your binaries and dependencies. This is great because now even the build process will be consistent across all developers’ machines (and the central build system), so in addition to eliminating the “runs on my machine” problem, it also alleviates any “builds on my machine” problems, where developers might have different tool versions or environment setup.

The first time you run, there’s a significant delay while the appropriate image files are downloaded, but this is a one-time hit.

Your service image contains a CLR debugger, which Visual Studio can hook up to, so you can step through your service code.

What’s interesting (and not a little surprising) is that if you’re targeting .NET Core, your image will target a Linux base image by default. Docker for Windows also defaults to running containers in a Linux Hyper-V VM, so when you hit F5 the container will run your .NET Core service on Linux, and you’ll be remote debugging into a Linux machine.

All very cool stuff, and works well right out of the box without too much fuss. Of course, you can also do all of this manually from the command-line if you prefer to develop in a lightweight editor like VSCode.

Microsoft Azure Hybrid–At Last!

From Reuters: http://www.reuters.com/article/us-microsoft-azure-idUSKBN19V1KQ

I think many enterprises have been waiting for this for a long time, and that it will trigger a significant uptick in Azure usage. There are a couple of things about the cloud that instill resistance in enterprises:

Privacy/Security/Regulatory. Especially in finance, and especially in European jurisdictions, there are often regulatory requirements around where data can physically reside, so in some cases the shared public cloud isn’t even an option. Even without regulations, putting sensitive data in the cloud has made enterprises nervous.

Development cost. Unless you’ve demonstrated spectacular foresight and discipline with your application architecture (and you haven’t), there’s going to be some redesign work required in order to use the cloud effectively. That’s going to take a while to build and test, and you’re going to be paying for your cloud infrastructure through all of that as pure overhead until you go live.

The ability to run some parts of your applications on the cloud and some on premises, yet still use the same tools, patterns and skillsets across both, largely removes these two hurdles. The holy grail is that you can keep your sensitive data and services locally on premises, while still taking advantage of the ability to scale up public-facing front-end applications, and for development and testing you can utilize a “local Azure”, running on hardware you own, that looks and feels like the shared public version, without incurring a significant cost overhead. When you’re happy with your solution, you can easily move the less sensitive parts to the public cloud, because it’s the same animal you’ve been using on premises.

I’m looking forward to experimenting with Azure again to see how flexible this hybrid approach is in reality.

Disappearing Scrollbar in Edge

This has been bugging the hell out of me lately. I’m not sure when Edge started doing this, but it’s really quite annoying if you’re accustomed to using the vertical scrollbar on the right of the browser to “page” down or up by clicking the areas above and below the actual scrollbar “thumb” (the bit you can drag to scroll quickly). It doesn’t seem to happen on every site, but it does seem to be consistent on sites with content that spans all the way to the right-hand edge.

When reading a long blog post or web page, I usually position the mouse pointer near the bottom of the scrollbar and click to page down through the article as I progress. Unfortunately, in Edge the scrollbar now entirely disappears after a few seconds of mouse inactivity, and doesn’t automatically reactivate on a mouse click – only when you move the mouse pointer completely off the area where the scrollbar used to be, and then back over to where it should be. I find myself constantly having to look over to the right in order to perform that little dance every minute or so. Very distracting. I guess I could use the keyboard for Page Up/Page Down, but on laptops nowadays you need to use the Fn key to get those, and I find a left-click more convenient.

I wonder who thought this would be a good idea? I suppose there’s some non-mouse, non-desktop scenario (mobile?) where it makes sense, but on desktops it certainly violates the Principle of Least Astonishment, and since I don’t see any options to turn the behavior off, I guess I’ll have to live with it or switch back to Firefox…

Graph Databases and Neo4j

My latest consulting project made heavy use of the graph database product Neo4j. I had not previously had an opportunity to look at graph databases, so this was a major selling point in accepting the gig. I had also suffered through some major pain with relational databases via object-relational mapping layers (ORMs), so I was keen to experience a different way of managing large-scale data sets in financial systems.

I won’t (can’t) for various reasons provide a lot of detail about the specific use cases on this project, but I’ll put out some thoughts on the general pros and cons of graph databases based on several months of experience with Neo4j. I think I’m going to be using (and recommending) it a lot more going forward.

What is a graph database?

A graph essentially consists of only two main types of data structure: nodes and relationships. Nodes typically represent domain objects (entities), and relationships represent how those entities, well, how they are related to each other. In graph databases, both nodes and relationships are first-class concepts. Compare this to relational databases, where rows (in tables) are the core construct and relationships must be modeled with foreign keys (row attributes) and JOIN-ed when querying, or to document-store databases, where whole aggregates model entities and contain references to other aggregates (which usually must be loaded with separate queries and in their entirety in order to “dereference” a related data point). Depending on your use cases these queries can be complex and/or expensive.

Why graphs?

Performance. If your data is highly connected (meaning that the relationships between entities can be very fluid, complex and are equally or more important than the actual entities and properties), a graph will be a more natural way to model that data. Typical examples of highly connected data sets can be found in recommendation engines, security/permission systems and social network applications. Since all relationship “lookups” are essentially constant-time operations (as opposed to index- or table-scans), even very complex multi-level queries perform extremely efficiently if you know which nodes to start with. Graph folks term this “index-free adjacency”. Anecdotally, over the course of the last few months, with a database of the order of a hundred million nodes/relationships, executing queries that sometimes traversed tens of thousands of nodes across up to a dozen levels of depth, I don’t think I saw any queries take more than about 100ms to complete. For large result sets the overhead of transferring and processing results client-side was by far the biggest bottleneck in the application.
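To make “index-free adjacency” a little more concrete, here is a toy sketch in Python. It is only an illustration of the idea, not how Neo4j actually stores anything: the `Node` class, the `traverse` function and the sample names are all made up for this example. Each node holds direct references to its neighbours, so following a relationship is a pointer hop rather than a lookup in a global index.

```python
from collections import deque


class Node:
    """A toy graph node that holds direct references to its neighbours."""

    def __init__(self, name):
        self.name = name
        self.outgoing = []  # list of (relationship_type, target Node) pairs

    def relate(self, rel_type, target):
        self.outgoing.append((rel_type, target))


def traverse(start, rel_type, max_depth):
    """Breadth-first walk from `start`, following only `rel_type` edges."""
    seen = {start.name}
    found = []
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not expand beyond the requested depth
        for kind, target in node.outgoing:
            # Each hop is a direct reference: no index or table scan involved.
            if kind == rel_type and target.name not in seen:
                seen.add(target.name)
                found.append(target.name)
                queue.append((target, depth + 1))
    return found


alice, bob, carol, dave = (Node(n) for n in ("alice", "bob", "carol", "dave"))
alice.relate("KNOWS", bob)
bob.relate("KNOWS", carol)
carol.relate("KNOWS", dave)
alice.relate("BLOCKS", dave)

friends_within_two_hops = traverse(alice, "KNOWS", 2)
print(friends_within_two_hops)
```

The cost of the walk depends on how many nodes and relationships you actually touch, not on the total size of the graph, which is roughly why knowing your starting nodes matters so much.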

Query Complexity. Queries against graph data can be a lot more concise and expressive than an equivalent SQL query that joins across multiple tables (especially in hierarchical use-cases).

What are the downsides?

Few or no schema constraints. If you’re used to your database protecting you against storing data of the wrong type, or forcing proper referential integrity according to a strict data model definition, graph databases are unlikely to make you happy. Even a stupid mistake like storing the string version of a number in a property that should be numeric can lead to a lot of head-scratching, since the graph will happily allow you to do that (but won’t correctly match when querying). At first I thought this was a deal-breaker, but it’s not as big a problem as you’d think if you’re following test-driven disciplines and institute some automated tools for periodic sanity/consistency checks. You’re not waiting for a customer to find your query/ORM-mapping bugs at run-time anyway, right? Besides, a typical major pain point in RDBMS/ORM-based systems is schema upgrades as your rigid data model changes, especially when relations subtly change shape between versions.
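Here is a rough sketch of the string-versus-number trap and of the kind of periodic consistency check I mean. It uses plain Python dictionaries as stand-in nodes rather than a real graph, and the `Account` label, the `balance` property and both helper functions are invented for the illustration.

```python
# Stand-in "nodes": plain dictionaries, not a real graph database.
nodes = [
    {"label": "Account", "id": 1, "balance": 250},
    {"label": "Account", "id": 2, "balance": "250"},  # stored as text by mistake
    {"label": "Account", "id": 3, "balance": 90},
]


def match(nodes, prop, value):
    """Strict equality match: the string "250" does not equal the number 250."""
    return [n["id"] for n in nodes if prop in n and n[prop] == value]


def type_violations(nodes, prop, expected_type):
    """Sanity check: ids of nodes whose property has an unexpected type."""
    return [
        n["id"]
        for n in nodes
        if prop in n and not isinstance(n[prop], expected_type)
    ]


matched = match(nodes, "balance", 250)
violations = type_violations(nodes, "balance", int)
print(matched, violations)
```

The query quietly misses node 2, and nothing complains until the check runs. A scheduled job doing the equivalent against the real database is cheap insurance.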

Hardware requirements. Optimally, in production you’re going to want a dedicated multi-node cluster where each node has enough RAM to potentially keep your entire graph in memory, so budget accordingly.

Neo4j

Neo4j is one of many graph databases (this technology is very hot right now and new ones seem to pop up every week). It is widely used by many large corporations and they are well ahead of the alternatives in terms of market share. I don’t want to give a full detailed review of Neo4j in this post, but their main selling points are:

ACID compliance. Many NoSQL technologies can offer only “eventual consistency”.

High availability clustering. Neo4j recently introduced a new form of HA called “Causal Clustering”, based on the Raft Protocol, which I haven’t yet had a chance to evaluate. At first I read this as “Casual Clustering” and had visions of nodes deciding arbitrarily on a whim whether or not they wanted to join or pull out of the pool or respond to queries… The older form of clustering replicates the graph across multiple physical nodes, and nodes elect a “master” which will be the primary target of all write operations. Non-master nodes can be load-balanced for distributed read operations.

Integrated query language (Cypher). This is a very elegant and succinct (compared to SQL) pattern-matching language for graph queries. Statements match patterns in the graph and then act on the resulting sub-graph (returning, updating or adding nodes and/or relationships).

Web interface. The interface is very nice and offers great visualizations of graph results. You can issue and save Cypher queries, perform admin-level operations and inspect the “shape” of your graph (i.e. what node labels and relationship types you currently have).

Flexible APIs. There is an HTTP REST interface, but they also now offer a TCP binary protocol called BOLT which is much more efficient.

Native Graph Model. Neo4j touts the purity of the graph model over other alternatives which attempt to mix and match different paradigms (eg. documents, key/value store and graph). I don’t have any direct experience of such “multi-model” databases, but I plan to compare Neo4j with other graph database products on a project in the future, so stay tuned.

Extensibility. You can add your own procedures and extensions in Java (very similar to CLR stored procedures in SQL Server).

Support. I can only speak to the enterprise-level experience, but Neo4j are very quick to respond to support questions and really stick with you until the problem is resolved.

On the whole, I think graph databases in general, and Neo4j in particular, have a bright future ahead.

Anyone else using graphs yet?

Project.json Nuget Package Management

Recently we've been looking at the new approach to Nuget package management in .NET projects, dubbed Project JSON. This initiative started in the ASP.NET world, but seems to be becoming the preferred method for .NET projects to declare Nuget package dependencies. The new approach changes the way that packages are resolved and restored, and has some very useful advantages.

Background

The larger context for looking at this was that we're starting to push several of our core framework assemblies into a separate source control repository, separate from the rest of the applications and modules that make use of those core components to create shipping products. The core components use Semantic Versioning ("product" releases are versioned according to Marketing!), are delivered as Nuget packages internally to our developers, and are no longer branched along with the Main codebase – they follow a “straight-line” of incremental changes.

We expect to gain a few benefits from this. First, developers will not have to spend compute cycles compiling the core components. Second, there will be a natural barrier to changing the core components. This may be viewed as an advantage or a disadvantage depending on your outlook, but the idea is that over time the core components should become and remain very stable (in terms of rate-of-change, not code quality), and "ship" on their own cadence. If core projects are all simple project references alongside the application code in the same solution, it's very easy and tempting to make small (sometimes breaking) changes to these components while working on something else, without necessarily fully considering the larger impact of those changes (or independently verifying the changes with new unit tests).

Perhaps a core architecture team will be responsible for the roadmap of core framework changes, and the resulting packages will be treated like any other third-party dependency by application and product developers. It's important that changes to the "stable" core components are carefully planned and tested, since many other components (and possibly customer-developed extensions) depend upon them. The applications and business modules that ultimately form shipping products are less "stable", in the sense that they can change quite frequently as customer requirements change.

However, there are also some fundamental implications to doing this. Developers still need to be able to step into core component code when debugging. Nuget packages support a .symbols.nupkg file alongside the actual binary package, and build servers like TeamCity can serve up those symbols to Visual Studio automatically when stepping into code from the package.

In addition, released software should be resistant to casually taking new (or updated) Nuget dependencies once shipped, since this can complicate "hotfixes" and customer upgrades. The Main branch, of course, representing the next major shipping version, could update to the latest dependencies more freely.

Nuget today

By default, Nuget manages package dependencies via a packages.config XML file that gets added to the project the first time you add a reference to a Nuget package. The name and version of the dependency (and, recursively, any dependencies of that package) is recorded in the packages.config file, and Nuget will use this information during package restore (which will usually take place just before compiling), to obtain the right binaries, targets and properties to include in the compilation. In addition to the entries in packages.config, references are also added to your project file (e.g. the .csproj file for C# projects). Some packages are slightly more complex than simply adding references to one or more managed assemblies, however (for example those that may include and P/Invoke native libraries). In these cases, even more "cruft" is added to the project file, to manage the insertion of special targets and properties into the MSBuild process so that the project can compile correctly and leave you with something runnable in your bin folder.

Resolved packages are usually stored in the source tree in a packages folder next to the solution file, and you usually want to exclude these from source control to avoid bloating the codebase unnecessarily.

Another snag to watch out for is potential version conflicts between projects that call for different versions of the same dependency. In the packages.config file, it's possible to declare that you want an exact version of a dependency, but by default you're saying "I want any version on or after this one", so if multiple projects ask for different versions, Nuget is forced to add an app.config file to your project with the appropriate binding redirects, to allow for the earlier references to be resolved at runtime to the latest version.

When you update a dependency to a newer version, every packages.config file that refers to the old version, and every project file that contains references to assemblies from those packages, and every app.config that has binding redirects for those assemblies, needs to be updated. This produces a lot of churn in source control and actually can take a while on large solutions with many dependencies.

Clearly there are a lot of moving parts to getting this all to work, and Nuget actually does a great job in managing it all, but it can get quite fragile if you have many Nuget references - even more so if you also have manually-maintained targets and properties in a project file for other custom build steps, or if you have a combination of managed and native (e.g. .vcxproj) files that require Nuget references. Nuget doesn't yet do well with crossing the managed-native boundary with these references, so you're usually just using Nuget to install the right package, and then manually maintaining the references yourself in the project files. In this world it's easy to make a mistake when editing files that Nuget is also trying to keep straight during package updates.

Nuget future

With the new approach, things are a lot simpler and cleaner. There's really only one file to worry about – a JSON file called project.json that replaces your packages.config file, and contains the same package name and version specifications. The neat thing is that this one file is all that Nuget needs to resolve dependencies – in your project file you no longer even need assembly references to the individual libraries delivered by the Nuget package, nor any special .targets or .props file references. At package restore time, Nuget will build a whole dependency graph of the packages that will be used in the build process, and will inject the appropriate steps into the executed build commands as necessary. You'll get warnings or errors if there are potential version conflicts for any packages.

Package files are no longer downloaded to the packages folder next to your solution – instead by default they're cached under the user's profile folder, so packages now only ever really need to be downloaded one time, and you don't have to care about excluding the packages folder from source control, because it's no longer in your source tree anyway.

Another neat advantage is that your project.json file only really needs to contain top-level dependencies – any packages that those dependencies in turn depend upon do not need to be explicitly mentioned in the project.json file, but the restore process will still correctly resolve them.

But by far the biggest win for our scenario is project.json's Floating Versions capability. This allows us to define the "greater than or equal to" version dependency with a wildcard (e.g. 1.0.*), such that during package restore, Nuget will use the latest available (1.0.X) package in the repository (by default, a specific version specification calls for an exact match). Now, when our core components are updated by the core team (at least for non-breaking changes), literally nothing (not even the project.json file) needs to change in our application and product codebase – a recompile will pick up the new versions from TeamCity automatically. Of course, when we branch Main for a specific release, we will likely update the version specifications in those branches to remove the wildcard and "fix" on a specific "official" version of our core dependencies, so that when we work on release branches to fix bugs, we can't inadvertently introduce a new core package dependency.
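As a rough mental model of the difference between a floating and a fixed version specification, here is a small Python sketch. It is a simplification under my own assumptions (purely numeric dotted versions, no pre-release labels) and is not Nuget's actual resolution logic; `parse`, `resolve` and the sample feed are made up for the example.

```python
def parse(version):
    """Turn "1.0.10" into (1, 0, 10) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def resolve(spec, available):
    """Return the highest available version matching `spec`, or None."""
    if spec.endswith(".*"):
        # Floating: any version that starts with the given prefix qualifies.
        prefix = parse(spec[:-2])
        candidates = [v for v in available if parse(v)[:len(prefix)] == prefix]
    else:
        # Fixed: only an exact match qualifies.
        candidates = [v for v in available if v == spec]
    return max(candidates, key=parse) if candidates else None


feed = ["0.9.4", "1.0.0", "1.0.2", "1.0.10", "1.1.0"]
floating = resolve("1.0.*", feed)
pinned = resolve("1.0.2", feed)
print(floating, pinned)
```

With the wildcard, a new 1.0.x package published by the core team is picked up on the next restore with no change to the consuming project; with the fixed specification, a release branch stays where it is until someone edits the version on purpose.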

The new approach is available in Visual Studio 2015 Update 1. Unfortunately there's no automated way yet (that I know of) to upgrade existing projects, so I've been doing the following, based on Oren Novotny's advice:

1. Add an "empty" project.json file to the project in Visual Studio.

2. Delete the packages.config file.

3. Unload the project in Visual Studio and edit it. Remove any elements that refer to Nuget .targets or .props files, and remove any reference elements with HintPaths that point to assemblies under the old packages folder.

4. Reload the project.

5. Build the project. Watch a bunch of compilation errors fly by, but these will help to identify which Nuget packages to re-reference. On large codebases it's possible to end up with redundant references as code is refactored, so this step has actually helped us to cleanup some dependencies.

6. Right-click the project and "Manage Nuget packages". Add a reference to one of the packages for which there was a compilation error. If you actually know the dependencies between Nuget packages you can be smart here and start with the top-level ones.

7. Repeat from 5. until the build succeeds.
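The project-file edit is the most tedious part, so here is a hedged Python sketch of how the HintPath half of it could be scripted. It is not a tool I have used in anger: the function name, the `\packages\` marker and the sample project XML (including the package name and version) are assumptions for illustration, and it does not touch the .targets or .props imports, which still need removing by hand.

```python
import xml.etree.ElementTree as ET

# Namespace used by classic (non-SDK) MSBuild project files.
MSBUILD_NS = "http://schemas.microsoft.com/developer/msbuild/2003"


def strip_package_references(csproj_xml, packages_marker="\\packages\\"):
    """Remove <Reference> elements whose <HintPath> points into the packages folder.

    Returns the cleaned XML text and the Include names that were removed.
    """
    ET.register_namespace("", MSBUILD_NS)  # keep the output free of ns0: prefixes
    root = ET.fromstring(csproj_xml)
    removed = []
    for group in root.findall(f"{{{MSBUILD_NS}}}ItemGroup"):
        for ref in list(group.findall(f"{{{MSBUILD_NS}}}Reference")):
            hint = ref.find(f"{{{MSBUILD_NS}}}HintPath")
            if hint is not None and packages_marker in (hint.text or ""):
                group.remove(ref)
                removed.append(ref.get("Include"))
    return ET.tostring(root, encoding="unicode"), removed


# Made-up sample project content, for illustration only.
SAMPLE = r"""<Project xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <ItemGroup>
    <Reference Include="System" />
    <Reference Include="Newtonsoft.Json">
      <HintPath>..\packages\Newtonsoft.Json.8.0.2\lib\net45\Newtonsoft.Json.dll</HintPath>
    </Reference>
  </ItemGroup>
</Project>"""

cleaned, removed = strip_package_references(SAMPLE)
print(removed)
```

The list of removed names doubles as a checklist of packages to re-reference once the project is reloaded. Try it on a copy of the project file first, since round-tripping through an XML parser can change formatting and drop comments.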

For a large codebase this is a lot of mechanical work, but so far the benefits have been quite positive. I may attempt to put together a VSIX context menu to automate the conversion if I get a chance, but I suspect that the Project JSON folks are already working on that and will beat me to it...

BUILD 2016: C# to get F#-like Pattern Matching

This is interesting, and should help to make C# more concise. Scroll to around the 24 minute mark.

Bookmarks in Windows Workflow Foundation

There’s an important fix in .NET 4.6 related to Workflow Foundation’s bookmarking features. The mechanisms by which workflow instances are bookmarked, suspended and resumed are fairly complex, and I don’t think the details are very well documented. Having fought through some issues myself, and received some help via MSDN forums (thanks Jim Carley) and bug reports to Microsoft, here’s how I understand bookmarks to work.

First, what is a bookmark? As its name suggests, it’s a way to remember where you were in the workflow, so that you can continue where you left off if your workflow idles (and is subsequently evicted from the runtime) for some reason.

As it transpires, there seem to be two types of bookmark. There are those that arise from the enforcement of the ordering of the operations that define the workflow (Jim calls these “protocol bookmarks”). The Receive activity is a classic example of this type of bookmark, and you can “resume” them by name (indeed, that’s how the runtime ultimately “invokes” the workflow operation due to a message coming into the service endpoint). There are also bookmarks that are not so obvious when looking at a workflow design – they’re introduced by the workflow runtime for its own housekeeping purposes (“internal bookmarks”). The Delay activity is an example of this – the runtime creates a bookmark so that the instance can resume automatically at the appropriate time. The Pick activity is another, less obvious example; here the runtime needs a bookmark that gets resumed once the Trigger of a PickBranch completes, so that it can subsequently schedule the Action for that branch. Even if the trigger also contains protocol bookmarks, or if the Action part does nothing, the internal bookmark is still required.

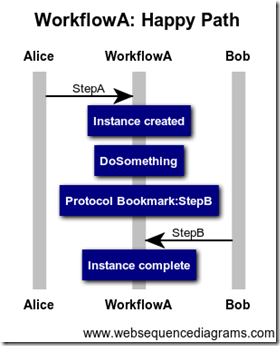

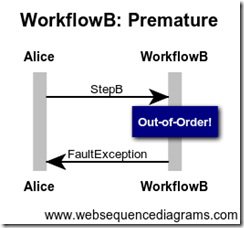

When a message for a protocol bookmark (i.e. Receive) comes in, if there’s no active protocol bookmark that matches it, ordinarily the caller will see an “out-of-order” FaultException. However, if there are also active internal bookmarks, the runtime will wait to see if that internal bookmark gets resumed (which could potentially cause the protocol bookmark that we’re actually trying to resume to become active, in which case we can just resume it, transparently to the caller). If the internal bookmark does not get resumed, eventually a TimeoutException will be returned to the caller. Consider a pre-4.6 workflow such as the following (WorkflowA):

The protocol for this workflow is simple – we want StepA to happen first, then DoSomething (which for our purposes is assumed to not create an internal bookmark at any point), and then StepB to happen only after all that. For a particular instance of this workflow, let’s consider some operation call sequences:

I think these are all fairly intuitive and work as expected.

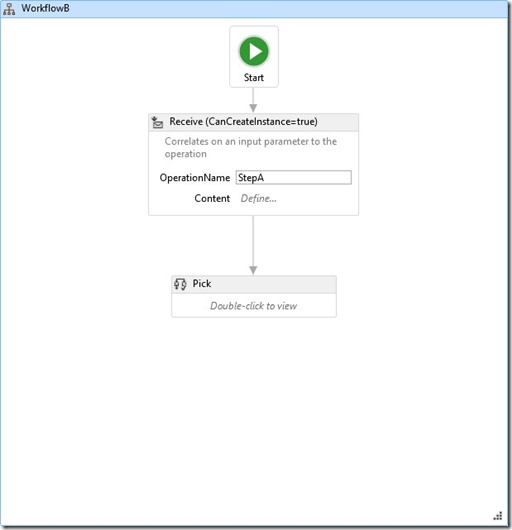

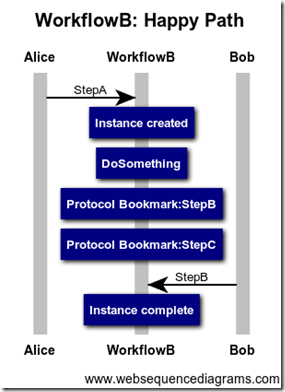

Now consider a workflow like this (WorkflowB):

Here we have a choice between two operations before the workflow instance completes. Here are our three test call sequences for this workflow:

Our first two scenarios actually work the same way in both workflows, but WorkflowB times out for the third case.

This is not intuitive and certainly not ideal, since a caller would have to wait for the timeout to expire, and wouldn’t actually be told the truth about the failure even after that.

Unfortunately, with content-based correlation, this scenario is likely to happen if two separate callers attempt to open a workflow for the same correlated identity at about the same time. The first caller will succeed and create the workflow instance; the second caller will time out. Ideally you’d want the second caller to immediately get the “Out-of-Order” exception, but you can’t guarantee that in the presence of internal bookmarks.

So, don’t use activities that use internal bookmarks. Right?

Well, they’re pretty much unavoidable if you want to have an extensible workflow framework. If you want to give domain experts the full range of activities with which to develop their workflows, banning Delay, Pick, and State is a serious hindrance. Pick is especially useful for a “four-eyes” class of workflows whereby a request for an action to be performed must be reviewed and approved (or rejected) by a different user.

So, to the solution.

With 4.6, the above workflows behave by default exactly as they did pre-4.6. However, you can now take control of what the runtime does when it encounters a message for a Receive for which there is no active bookmark, while internal bookmarks are present. The new setting can be placed in the app.config (or web.config) file under appSettings:

<add key="microsoft:WorkflowServices:FilterResumeTimeoutInSeconds" value="60"/>

With this setting, you can specify how long the timeout should be in our “Competing Creators” scenario above. If you set it to zero, you won’t actually get a TimeoutException at all – instead you’ll get the same “Out-of-Order” FaultException you’d get in the “Premature” scenario. This seems to solve the problem nicely.

64-bit Numerical Stability with Visual C++ 2013 on FMA3-capable Haswell Chips (_set_FMA3_enable)

An interesting diversion this week, when we noticed regression tests failing on our TeamCity build server. The differences were mostly tiny, but warranted a closer inspection since there were no significant code changes to explain them, and the tests were still passing on most developer PCs. The only thing that had really changed was that we’d moved the TeamCity build agents to newer hardware (with later-model Haswell chipsets).

We were able to boil the differences in behaviour down to a simple 3-line C++ program (essentially a call to std::exp), and, sure enough, the program gave a different result when compiled in VS2013 and executed on the new Haswell CPU. Any other combination of C++ runtime and chip (and any x86 build) gave us our “expected” answer. Obviously you can’t have your build server and your developer PCs disagree when it comes to floating-point calculations without chaos (or games of baseline whack-a-mole between developers when some of them are running newer PCs than others).

We tried forcing the rounding mode with _controlfp_s, but just ended up with different differences. We tried the Intel C++ compiler (which was slightly more stable but still off between Haswell and older CPUs).

As it turns out, the VC++ 2013 runtime uses FMA3 instructions for some transcendental functions (std::exp included), when available at runtime, and this was the difference for us. After disabling this behaviour in the runtime with _set_FMA3_enable(0), our tests started passing on both types of CPU, with no other code or baseline changes necessary.
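To see why a fused multiply-add can shift the low-order bits of a result at all, here is a minimal F# sketch. It is not the three-line C++ repro mentioned above (that program isn't reproduced in this post); it assumes .NET Core 3.0 or later, where System.Math.FusedMultiplyAdd is available, and the values x and c are chosen purely for illustration.

```fsharp
open System

// x = 1 + 2^-30 and c = 1 + 2^-29 are both exactly representable as doubles.
// The exact square of x is c + 2^-60, which does not fit in a double.
let x = 1.0 + 1.0 / 1073741824.0   // 2^30 = 1073741824
let c = 1.0 + 1.0 / 536870912.0    // 2^29 = 536870912

// Separate multiply and subtract: x * x is rounded to c before the
// subtraction happens, so the difference comes out as zero.
let unfused = x * x - c

// Fused multiply-add: the product is kept exact until the single final
// rounding, so the 2^-60 term survives.
let fused = Math.FusedMultiplyAdd(x, x, -c)

printfn "unfused = %g, fused = %g" unfused fused
```

Both answers are "correct" for the instructions that produced them, which is why a runtime that switches instruction sets depending on the CPU can change a baseline without any code change.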

Thanks to James McNellis for pointing us in the right direction on the FMA3 optimizations.

James also noted that the FMA3 optimizations are much faster, so at some point we will experiment with enabling those, and update our baselines, but for now we can move on with stable numbers between builds.

Bug? Content-Based Correlation in Windows Workflow Foundation

This one caused me quite a bit of grief this week – maybe I can save someone else some pain. When configuring content-based correlation in the Workflow (4.0/4.5) designer, I think there’s a subtle bug in the dialog that allows you to choose the correlation key and XPath expression used to establish correlation on a Receive or SendReply activity.

It manifests itself when the workflow engine attempts to apply the correlation query at runtime:

A correlation query yielded an empty result set. Please ensure correlation queries for the endpoint are correctly configured.

It only occurs under the following conditions:

- The message or parameter that contains the correlation is a complex DataContract (i.e. not a primitive value type).

- That DataContract type is derived from another base class DataContract.

- The correlation property comes from the base class.

- The base class DataContract and the derived class DataContract are in different namespaces.

Under these conditions, the Add Correlation Initializers dialog sets the namespace of the property as being defined in the namespace of the derived class, not the base class.

Fortunately the fix is easy – you can manually edit the XAML to refer to the namespace of the base class instead, e.g.:

<XPathMessageQuery x:Key="key1">
  <XPathMessageQuery.Namespaces>
    <ssx:XPathMessageContextMarkup>
      <x:String x:Key="xg0">http://schemas.datacontract.org/2004/07/ClassLibrary2</x:String>
      <x:String x:Key="xgSc">http://tempuri.org/</x:String>
    </ssx:XPathMessageContextMarkup>
  </XPathMessageQuery.Namespaces>sm:body()/xgSc:StartResponse/xgSc:StartResult/xg0:Id</XPathMessageQuery>

Here is the sample solution. The fix is to change the value of Key xg0 to refer to ClassLibrary2 instead of ClassLibrary1 (which is what the designer wants to set it to).

Google is shutting down Reader

http://googleblog.blogspot.com/2013/03/a-second-spring-of-cleaning.html

Wait, what? This is Google’s best product, and they’re shit-canning it?

This has to rank up there with Fox’s decision to cancel Firefly…

What’s My Super Bowl Pool Box Worth?

A bit of fun for this Super Bowl weekend. If you’re unfamiliar with a Super Bowl pool, the basics are simple:

Draw a 10 x 10 grid. One axis represents the AFC team, the other represents the NFC team. Participants pay a fixed amount for each of the 100 boxes. A payoff is set for each quarter of the game. Each row of the grid is randomly assigned a digit from 0-9, and likewise for each column of the grid. At the end of each quarter of the game, the final digit of each team’s score is used to determine the winning box for that quarter, and the owner of that box gets the loot.
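As a quick worked example of that last rule, here is a minimal sketch that works out the winning box for each quarter of a single game. The quarter-by-quarter points are the first row of the data set listed further down; the helper names (runningScore, lastDigits, winningBoxes) are made up for this illustration.

```fsharp
// Points scored in each quarter of one game.
let afcQuarters = [ 0; 10; 0; 0 ]
let nfcQuarters = [ 7; 7; 14; 7 ]

// Running score at the end of each quarter (drop the initial 0 that scan emits).
let runningScore quarters =
    quarters |> List.scan (+) 0 |> List.tail

// Final digit of each running score.
let lastDigits quarters =
    runningScore quarters |> List.map (fun score -> score % 10)

// (AFC digit, NFC digit) of the winning box at the end of each quarter.
let winningBoxes = List.zip (lastDigits afcQuarters) (lastDigits nfcQuarters)

printfn "%A" winningBoxes
```

For this game the AFC digit stays at 0 throughout, while the NFC digit goes 7, 4, 8, 5, so the boxes (0,7), (0,4), (0,8) and (0,5) collect the four prizes.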

The interesting question for box owners after the box numbers are assigned is: How much am I likely to win with these numbers? Moreover, if you’d entered the same pool every year since the Super Bowl began (i.e. a pool with the same per-quarter payoffs), and happened to get the same numbers every year, how much would you have won in total? Obviously this is a contrived scenario, but an analysis of the history of Super Bowl scores makes for some interesting observations.

The nature of the scoring system in American Football is obviously the biggest factor in determining the “value” of a box: 3 (field goal) and 7 (touchdown + extra point), along with the final digits of their multiples (6, 9, 4 and 1), seem likely to be winners. Also, the person who owns the 0-0 box stands to potentially win the entire pot before a ball is kicked or thrown, if two lousy offenses can’t score, or if the scoring goes in sets of touchdown, extra point, field goal. But if you think you have good numbers, a safety or a missed extra point is likely to ruin your day.

So, how much is my box worth in the above scenario?

// Per-quarter points scored for every superbowl - AFC,NFC
let quarterPoints = [
    ([ 0;10; 0; 0],[ 7; 7;14; 7]);
    ([ 0; 7; 0; 7],[ 3;13;10; 7]);
    ([ 0; 7; 6; 3],[ 0; 0; 0; 7]);
    ([ 3;13; 7; 0],[ 0; 0; 7; 0]);
    ([ 0; 6; 0;10],[ 3;10; 0; 0]);
    ([ 0; 3; 0; 0],[ 3; 7; 7; 7]);
    ([ 7; 7; 0; 0],[ 0; 0; 0; 7]);
    ([14; 3; 7; 0],[ 0; 0; 0; 7]);
    ([ 0; 2; 7; 7],[ 0; 0; 0; 6]);
    ([ 7; 0; 0;14],[ 7; 3; 0; 7]);
    ([ 0;16; 3;13],[ 0; 0; 7; 7]);
    ([ 0; 0;10; 0],[10; 3; 7; 7]);
    ([ 7;14; 0;14],[ 7; 7; 3;14]);
    ([ 3; 7; 7;14],[ 7; 6; 6; 0]);
    ([14; 0;10; 3],[ 0; 3; 0; 7]);
    ([ 0; 0; 7;14],[ 7;13; 0; 6]);
    ([ 7;10; 0; 0],[ 0;10; 3;14]);
    ([ 7;14;14; 3],[ 0; 3; 6; 0]);
    ([10; 6; 0; 0],[ 7;21;10; 0]);
    ([ 3; 0; 0; 7],[13;10;21; 2]);
    ([10; 0; 0;10],[ 7; 2;17;13]);
    ([10; 0; 0; 0],[ 0;35; 0; 7]);
    ([ 0; 3;10; 3],[ 3; 0; 3;14]);
    ([ 3; 0; 7; 0],[13;14;14;14]);
    ([ 3; 9; 0; 7],[ 3; 7; 7; 3]);
    ([ 0; 0;10;14],[ 0;17;14; 6]);
    ([ 7; 3; 7; 0],[14;14; 3;21]);
    ([ 3;10; 0; 0],[ 6; 0;14;10]);
    ([ 7; 3; 8; 8],[14;14;14; 7]);
    ([ 0; 7; 0;10],[10; 3; 7; 7]);
    ([14; 0; 7; 0],[10;17; 8; 0]);
    ([ 7;10; 7; 7],[ 7; 7; 3; 7]);
    ([ 7;10; 0;17],[ 3; 3; 0;13]);
    ([ 0; 0; 6;10],[ 3; 6; 7; 7]);
    ([ 7; 3;14;10],[ 0; 0; 7; 0]);
    ([ 0;14; 3; 3],[ 3; 0; 0;14]);
    ([ 3; 0; 6;12],[ 3;17;14;14]);
    ([ 0;14; 0;18],[ 0;10; 0;19]);
    ([ 0; 7; 7;10],[ 0; 7; 7; 7]);
    ([ 0; 7; 7; 7],[ 3; 0; 7; 0]);
    ([ 6;10; 6; 7],[14; 0; 3; 0]);
    ([ 0; 7; 0; 7],[ 3; 0; 0;14]);
    ([ 3;14; 3; 7],[ 0; 7; 0;16]);
    ([10; 0; 7; 0],[ 0; 6;10;15]);
    ([ 0;10; 7; 8],[14; 7; 0;10]);
    ([ 9; 0; 6; 6],[ 0;10; 7; 0]);
    ([ 3; 3;17; 8],[ 7;14; 7; 6]);
    ([ 8;14;14; 7],[ 0; 0; 8; 0]);
    ([ 0;14;10; 0],[ 0;14; 0;14]);
    ([ 0; 7; 0; 3],[10; 3; 3; 8]);
    ([ 0;21; 7; 0],[ 0; 3; 6;19]);
    ([ 9;13; 7;12],[ 3; 9;14; 7]);
    ([ 0; 0; 3; 0],[ 0; 3; 0;10]);
    ([ 3; 7;10; 0],[ 7; 3; 0;21]);
    ]

// Dollars to win at the end of each quarter
let prizes = [| 50; 125; 75; 250 |]

let total points = Seq.scan (+) (List.head points) (List.tail points)
let modulo = fun i -> i % 10
let modulos points = Seq.map modulo (total points)
let quarterModulos = Seq.map (fun (afc, nfc) -> modulos afc, modulos nfc) quarterPoints

let payoff : int[,] = Array2D.zeroCreate 10 10
let payQuarter (afc, nfc, prize) = payoff.[nfc,afc] <- payoff.[nfc,afc] + prize
let payGame (afc, nfc) = Seq.iter payQuarter (Seq.zip3 afc nfc prizes)
Seq.iter payGame quarterModulos

for i in 0 .. 9 do
    for j in 0 .. 9 do
        printf "%5d" payoff.[i,j]
    printf "\n"

This snippet is also runnable at repl.it. The results are interesting:

43% of the possible outcomes seem to have never occurred in the Super Bowl.

0 and 7 are indeed big winners, and 7 – 4 is by far the most valuable box. However, its reciprocal 4 – 7 is one of the least valuable of the winning boxes.

Another Simple Way to Tinker with F#

From Microsoft Research – tryfsharp.org, a very cool site for testing and sharing F# code, complete with a built-in JavaScript charting engine. I just posted the Discount Curve sample to try it out.

Can’t figure out how to get the time axis to render dates, though - the Dojo chart needs the data to be numeric, and I don’t see a way to use a label function to do the conversion back to date, although the library itself seems to support it…

How strong are your development standards?

Having worked for a number of years on real-time process control systems (think: device drivers and management information applications for monitoring and controlling large-scale industrial processes such as oil & gas refinement, chemical and food production, etc.), the recent years I’ve spent working on financial software systems have highlighted quite a few differences for me with regard to how the two industries approach quality assurance.

Going into finance, I had a certain expectation that there would be many aspects of the discipline that would align quite closely with those of process control – the quantity of data to manage; the timely calculation and analysis of statistics; the automation of feedback control in response to stimuli, etc. – all would seem to be equally important when opening and closing valves and switches as they are when buying and selling financial instruments.

Human beings can die as a result of a hardware or software glitch in a critical control system of an industrial process. Back when I was working in that area, hardware and software manufacturers usually tended to be very much concerned about the quality of their offerings, and purchasers of those products almost always tested the crap out of them for long periods before trusting them in production. [Not so much for the management information systems, but certainly for the low-level stuff].

The catastrophic failure of a trading system (as we saw recently with a certain Knight Capital) is not, let’s face it, going to literally kill anyone, but the costs of those failures can adversely impact a great many people in many different ways. One would think that quality assurance, software testing and development standards would be of great importance in these systems, too. But my experience in finance has suggested to me that that is often not the case.

Anecdotally, I get the sense that the problem is perhaps worse with the in-house developments of practitioners (banks, funds), than it is with the products of ISVs, but really not by much, maybe because there is often much migration of personnel between the two.

And then, this week, having sent a device that’s roughly the size and weight of a Mini Cooper through space for nine months, we parked it safely on Mars. I say we, meaning human beings, but it was really mostly down to a select bunch of humans much smarter than we, over at NASA. Maybe it’s just me, but I’m still amazed that something like this is possible by the same species that gave us the Synthetic CDO.

So, what kind of development standards are required in order to pull something like this off? [Meaning the Mars thing, of course, and not the CDO thing.]

Some of them are right here, take a look: JPL Institutional Coding Standard for the C Programming Language

It’s a fantastic read – clearly written, very approachable and not long or dense (20 pages or so) – but here’s the summary:

How do your standards measure up? Are they even documented as nicely?

Never Underestimate the Awesomeness of .NET Reflector

I'm sure that anyone who's spent a significant amount of time developing .NET applications is well aware of .NET Reflector - a static analysis tool originally developed by Lutz Roeder, but now owned by Red Gate Software. I think that by now Reflector is so widely used and so fundamentally useful that we tend to take it for granted. A recent "Subversion malfunction", however, has renewed my appreciation for Reflector.

About six months ago I had thrown together a Visual Studio debugger visualizer for one of our more complicated domain objects. Not a great deal of code; about seven or eight classes, with a WPF (ElementHost-ed) view, view model and some serialization plumbing. I guess I had got it working, used it a couple of times for a specific debugging session, and then promptly forgot all about it and moved back onto deliverable features.

At some point since then, I think I may have been overly aggressive in the use of my hand-rolled PowerShell cmdlet "Remove-SvnUncontrolled" on that working copy, because this week I found a need for that debugger visualizer again, and could not find the source code anywhere on my hard drive. However, I did have the old compiled assembly of the visualizer in my C:\Program Files (x86)\Microsoft Visual Studio 9.0\Common7\Packages\Debugger\Visualizers folder, and opened it up in Reflector.

I had thought that maybe, at best, Reflector would be able to give me the gist of the code, and I could re-write the thing in about half a day's work. In fact, I was able to literally copy-paste entire classes from Reflector into a new project, add a couple of missing metadata attributes, add assembly references, and get the visualizer compiling again. The only things missing were the icons and the XAML markup of the view (which is, of course, the entire view if you’re doing MVVM properly). I figured I’d just have to craft that again from first principles.

Then, I remembered that Reflector can give you the resources from the compiled assembly, too, and that the XAML is just compiled to BAML and stored as an embedded resource.

Then, I found this cool Reflector addin, which decompiles the BAML resource back to usable XAML.

All in all, I resurrected my lost visualizer project in under thirty minutes.

Silverlight Downfall

The "Hitler Reacts..." meme has been making the rounds for a while now, but this one I can really identify with:

Discount/Zero Curve Construction in F# – Update

For convenience of copy-paste and experimentation, I’ve posted a full working snippet (if that’s the right word – it’s over 400 lines!) of the discount curve bootstrapper to the excellent fssnip.net site.

open System.Text.RegularExpressions

type Date = System.DateTime
let date d = System.DateTime.Parse(d)

type Period = { startDate:Date; endDate:Date }
type Calendar = { weekendDays:System.DayOfWeek Set; holidays:Date Set }
type Tenor = { years:int; months:int; days:int }

let tenor t =
    let regex s = new Regex(s)
    let pattern = regex ("(?<weeks>[0-9]+)W" +
                         "|(?<years>[0-9]+)Y(?<months>[0-9]+)M(?<days>[0-9]+)D" +
                         "|(?<years>[0-9]+)Y(?<months>[0-9]+)M" +
                         "|(?<months>[0-9]+)M(?<days>[0-9]+)D" +
                         "|(?<years>[0-9]+)Y" +
                         "|(?<months>[0-9]+)M" +
                         "|(?<days>[0-9]+)D")
    let m = pattern.Match(t)
    if m.Success then
        // Groups that did not take part in the match default to zero
        { years = (if m.Groups.["years"].Success
                   then int m.Groups.["years"].Value
                   else 0)
          months = (if m.Groups.["months"].Success
                    then int m.Groups.["months"].Value
                    else 0)
          days = (if m.Groups.["days"].Success
                  then int m.Groups.["days"].Value
                  else if m.Groups.["weeks"].Success
                  then int m.Groups.["weeks"].Value * 7
                  else 0) }
    else
        failwith "Invalid tenor format. Valid formats include 1Y 3M 7D 2W 1Y6M, etc"

let offset tenor (date:Date) =
    date.AddDays(float tenor.days)
        .AddMonths(tenor.months)
        .AddYears(tenor.years)

let findNthWeekDay n weekDay (date:Date) =
    let mutable d = new Date(date.Year, date.Month, 1)
    while d.DayOfWeek <> weekDay do d <- d.AddDays(1.0)
    for i = 1 to n - 1 do d <- d.AddDays(7.0)
    if d.Month = date.Month then
        d
    else
        failwith "No such day"

// Assume ACT/360 day count convention
let Actual360 period = double (period.endDate - period.startDate).Days / 360.0

let rec schedule frequency period =
    seq {
        yield period.startDate
        let next = frequency period.startDate
        if (next <= period.endDate) then
            yield! schedule frequency { startDate = next; endDate = period.endDate }
    }

let semiAnnual (from:Date) = from.AddMonths(6)

let isBusinessDay (date:Date) calendar =
    not (calendar.weekendDays.Contains date.DayOfWeek || calendar.holidays.Contains date)

type RollRule =
    | Actual = 0
    | Following = 1
    | Previous = 2
    | ModifiedFollowing = 3
    | ModifiedPrevious = 4

let dayAfter (date:Date) = date.AddDays(1.0)
let dayBefore (date:Date) = date.AddDays(-1.0)

let deriv f x =
    let dx = (x + max (1e-6 * x) 1e-12)
    let fv = f x
    let dfv = f dx
    if (dx <= x) then
        (dfv - fv) / 1e-12
    else
        (dfv - fv) / (dx - x)

// One Newton-Raphson step, using the finite-difference derivative above
let newton f (guess:double) =
    guess - f guess / deriv f guess

// Simple recursive Newton solver: iterate until successive guesses agree to the given accuracy
let rec solveNewton f accuracy guess =
    let root = (newton f guess)
    if abs(root - guess) < accuracy then root else solveNewton f accuracy root

// Assume Log-Linear interpolation
let logarithmic (sampleDate:Date) highDp lowDp =
    let (lowDate:Date), lowFactor = lowDp
    let (highDate:Date), highFactor = highDp
    lowFactor *
        ((highFactor / lowFactor) **
            (double (sampleDate - lowDate).Days / double (highDate - lowDate).Days))

let rec roll rule calendar date =
    if isBusinessDay date calendar then
        date
    else
        match rule with
        | RollRule.Actual -> date
        | RollRule.Following -> dayAfter date |> roll rule calendar
        | RollRule.Previous -> dayBefore date |> roll rule calendar
        | RollRule.ModifiedFollowing ->
            let next = roll RollRule.Following calendar date
            if next.Month <> date.Month then
                roll RollRule.Previous calendar date
            else
                next
        | RollRule.ModifiedPrevious ->
            let prev = roll RollRule.Previous calendar date
            if prev.Month <> date.Month then
                roll RollRule.Following calendar date
            else
                prev
        | _ -> failwith "Invalid RollRule"

let rec rollBy n rule calendar (date:Date) =
    match n with
    | 0 -> date
    | x ->
        match rule with
        | RollRule.Actual -> date.AddDays(float x)
        | RollRule.Following ->
            dayAfter date
            |> roll rule calendar
            |> rollBy (x - 1) rule calendar
        | RollRule.Previous ->
            roll rule calendar date
            |> dayBefore
            |> roll rule calendar
            |> rollBy (x - 1) rule calendar
        | RollRule.ModifiedFollowing ->
            // Roll n-1 days Following
            let next = rollBy (x - 1) RollRule.Following calendar date
            // Roll the last day ModifiedFollowing
            let final = roll RollRule.Following calendar (dayAfter next)
            if final.Month <> next.Month then
                roll RollRule.Previous calendar next
            else
                final
        | RollRule.ModifiedPrevious ->
            // Roll n-1 days Previous
            let next = rollBy (x - 1) RollRule.Previous calendar date
            // Roll the last day ModifiedPrevious (step back one day, then roll back to a business day)
            let final = roll RollRule.Previous calendar (dayBefore next)
            if final.Month <> next.Month then
                roll RollRule.Following calendar next
            else
                final
        | _ -> failwith "Invalid RollRule"

let rec findDf interpolate sampleDate =
    function
    // exact match
    | (dpDate:Date, dpFactor:double) :: tail when dpDate = sampleDate ->
        dpFactor
    // falls between two points - interpolate
    | (highDate:Date, highFactor:double) :: (lowDate:Date, lowFactor:double) :: tail
        when lowDate < sampleDate && sampleDate < highDate ->
        interpolate sampleDate (highDate, highFactor) (lowDate, lowFactor)
    // recurse
    | head :: tail -> findDf interpolate sampleDate tail
    // falls outside the curve
    | [] -> failwith "Outside the bounds of the discount curve"

let findPeriodDf period discountCurve =
    let payDf = findDf logarithmic period.endDate discountCurve
    let valueDf = findDf logarithmic period.startDate discountCurve
    payDf / valueDf

let computeDf dayCount fromDf toQuote =
    let dpDate, dpFactor = fromDf
    let qDate, qValue = toQuote
    (qDate, dpFactor * (1.0 / (1.0 + qValue * dayCount { startDate = dpDate; endDate = qDate })))

// Just to compute f(guess)
let computeSwapDf dayCount spotDate swapQuote discountCurve swapSchedule (guessDf:double) =
    let qDate, qQuote = swapQuote
    let guessDiscountCurve = (qDate, guessDf) :: discountCurve
    let spotDf = findDf logarithmic spotDate discountCurve
    let swapDf = findPeriodDf { startDate = spotDate; endDate = qDate } guessDiscountCurve
    let swapVal =
        let rec _computeSwapDf a spotDate qQuote guessDiscountCurve =
            function
            | swapPeriod :: tail ->
                let couponDf = findPeriodDf { startDate = spotDate;
                                              endDate = swapPeriod.endDate } guessDiscountCurve
                _computeSwapDf (couponDf * (dayCount swapPeriod) * qQuote + a)
                    spotDate qQuote guessDiscountCurve tail
            | [] -> a
        _computeSwapDf -1.0 spotDate qQuote guessDiscountCurve swapSchedule
    spotDf * (swapVal + swapDf)

[<Measure>] type bp
[<Measure>] type percent
[<Measure>] type price

let convertPercentToRate (x:float<percent>) = x / 100.0<percent>
let convertPriceToRate (x:float<price>) = (100.0<price> - x) / 100.0<price>

type InterestRateQuote =
    | Rate of float
    | Percent of float<percent>
    | BasisPoints of float<bp>
    member x.ToRate() =
        match x with
        | Rate r -> r
        | Percent p -> p / 100.0<percent>
        | BasisPoints bp -> bp / 10000.0<bp>
    member x.ToPercentage() =
        match x with
        | Rate r -> r * 100.0<percent>
        | Percent p -> p
        | BasisPoints bp -> bp / 100.0<bp/percent>
    member x.ToBasisPoints() =
        match x with
        | Rate r -> r * 10000.0<bp>
        | Percent p -> p * 100.0<bp/percent>
        | BasisPoints bp -> bp

type FuturesContract = Date
let contract d = date d

type QuoteType =
    | Overnight                     // the overnight rate (one day period)
    | TomorrowNext                  // the one day period starting "tomorrow"
    | TomorrowTomorrowNext          // the one day period starting the day after "tomorrow"
    | Cash of Tenor                 // cash deposit period in days, weeks, months
    | Futures of FuturesContract    // year and month of futures contract expiry
    | Swap of Tenor                 // swap period in years

// Bootstrap the next discount factor from the previous one
let rec bootstrap dayCount quotes discountCurve =
    match quotes with
    | quote :: tail ->
        let newDf = computeDf dayCount (List.head discountCurve) quote
        bootstrap dayCount tail (newDf :: discountCurve)
    | [] -> discountCurve

// Generate the next discount factor from a fixed point on the curve
// (cash points are wrt to spot, not the previous df)
let rec bootstrapCash dayCount spotDate quotes discountCurve =
    match quotes with
    | quote :: tail ->
        let spotDf = (spotDate, findDf logarithmic spotDate discountCurve)
        let newDf = computeDf dayCount spotDf quote
        bootstrapCash dayCount spotDate tail (newDf :: discountCurve)
    | [] -> discountCurve

let bootstrapFutures dayCount futuresStartDate quotes discountCurve =
    match futuresStartDate with
    | Some d ->
        bootstrap dayCount
                  (Seq.toList quotes)
                  ((d, findDf logarithmic d discountCurve) :: discountCurve)
    | None -> discountCurve

// Swaps are computed from a schedule generated from spot and priced
// according to the curve built thus far
let rec bootstrapSwaps dayCount spotDate calendar swapQuotes discountCurve =
    match swapQuotes with
    | (qDate, qQuote) :: tail ->
        // build the schedule for this swap
        let swapDates = schedule semiAnnual { startDate = spotDate; endDate = qDate }
        let rolledSwapDates = Seq.map (fun (d:Date) -> roll RollRule.Following calendar d) swapDates
        let swapPeriods =
            Seq.pairwise rolledSwapDates
            |> Seq.map (fun (s, e) -> { startDate = s; endDate = e })
            |> Seq.toList
        // solve
        let accuracy = 1e-12
        let spotFactor = findDf logarithmic spotDate discountCurve
        let f = computeSwapDf dayCount spotDate (qDate, qQuote) discountCurve swapPeriods
        let newDf = solveNewton f accuracy spotFactor
        bootstrapSwaps dayCount spotDate calendar tail ((qDate, newDf) :: discountCurve)
    | [] -> discountCurve

let USD =
    { weekendDays = Set [ System.DayOfWeek.Saturday; System.DayOfWeek.Sunday ];
      holidays = Set [ date "2009-01-01";
                       date "2009-01-19";
                       date "2009-02-16";
                       date "2009-05-25";
                       date "2009-07-03";
                       date "2009-09-07";
                       date "2009-10-12";
                       date "2009-11-11";
                       date "2009-11-26";
                       date "2009-12-25" ] }

let curveDate = date "2009-05-01"
let spotDate = rollBy 2 RollRule.Following USD curveDate

let quotes =
    [ (Overnight, 0.045);
      (TomorrowNext, 0.045);
      (Cash (tenor "1W"), 0.0462);
      (Cash (tenor "2W"), 0.0464);
      (Cash (tenor "3W"), 0.0465);
      (Cash (tenor "1M"), 0.0467);
      (Cash (tenor "3M"), 0.0493);
      (Futures (contract "Jun2009"), 95.150);
      (Futures (contract "Sep2009"), 95.595);
      (Futures (contract "Dec2009"), 95.795);
      (Futures (contract "Mar2010"), 95.900);
      (Futures (contract "Jun2010"), 95.910);
      (Swap (tenor "2Y"), 0.04404);
      (Swap (tenor "3Y"), 0.04474);
      (Swap (tenor "4Y"), 0.04580);
      (Swap (tenor "5Y"), 0.04686);
      (Swap (tenor "6Y"), 0.04772);
      (Swap (tenor "7Y"), 0.04857);
      (Swap (tenor "8Y"), 0.04924);
      (Swap (tenor "9Y"), 0.04983);
      (Swap (tenor "10Y"), 0.0504);
      (Swap (tenor "12Y"), 0.05119);
      (Swap (tenor "15Y"), 0.05201);
      (Swap (tenor "20Y"), 0.05276);
      (Swap (tenor "25Y"), 0.05294);
      (Swap (tenor "30Y"), 0.05306) ]

let spotPoints =
    quotes
    |> List.choose (fun (t, q) ->
        match t with
        | Overnight -> Some (rollBy 1 RollRule.Following USD curveDate, q)
        | TomorrowNext -> Some (rollBy 2 RollRule.Following USD curveDate, q)
        | TomorrowTomorrowNext -> Some (rollBy 3 RollRule.Following USD curveDate, q)
        | _ -> None)
    |> List.sortBy (fun (d, _) -> d)

let cashPoints =
    quotes
    |> List.choose (fun (t, q) ->
        match t with
        | Cash c -> Some (offset c spotDate |> roll RollRule.Following USD, q)
        | _ -> None)
    |> List.sortBy (fun (d, _) -> d)

let futuresQuotes =
    quotes
    |> List.choose (fun (t, q) ->
        match t with
        | Futures f -> Some (f, q)
        | _ -> None)
    |> List.sortBy (fun (c, _) -> c)

let (sc, _) = List.head futuresQuotes
let (ec, _) = futuresQuotes.[futuresQuotes.Length - 1]

let futuresStartDate =
    findNthWeekDay 3 System.DayOfWeek.Wednesday sc
    |> roll RollRule.ModifiedFollowing USD

let futuresEndDate = (new Date(ec.Year, ec.Month, 1)).AddMonths(3)

// "invent" an additional contract to capture the end of the futures schedule
let endContract = (futuresEndDate, 0.0)

let futuresPoints =
    Seq.append futuresQuotes [endContract]
    |> Seq.pairwise
    |> Seq.map (fun ((_, q1), (c2, _)) ->
        (findNthWeekDay 3 System.DayOfWeek.Wednesday c2
         |> roll RollRule.ModifiedFollowing USD, (100.0 - q1) / 100.0))
    |> Seq.toList

let swapPoints =
    quotes
    |> List.choose (fun (t, q) ->
        match t with
        | Swap s -> Some (offset s spotDate |> roll RollRule.Following USD, q)
        | _ -> None)
    |> List.sortBy (fun (d, _) -> d)

let discountFactors =
    [ (curveDate, 1.0) ]
    |> bootstrap Actual360 spotPoints
    |> bootstrapCash Actual360 spotDate cashPoints
    |> bootstrapFutures Actual360 (Some futuresStartDate) futuresPoints
    |> bootstrapSwaps Actual360 spotDate USD swapPoints
    |> Seq.sortBy (fun (qDate, _) -> qDate)

printfn "Discount Factors"
Seq.iter (fun (d:Date, v) -> printfn "\t%s\t%.13F" (d.ToString("yyyy-MM-dd")) v) discountFactors

let zeroCouponRates =
    discountFactors
    |> Seq.map (fun (d, f) -> (d, 100.0 * -log(f) * 365.25 / double (d - curveDate).Days))

printfn "Zero-Coupon Rates"
Seq.iter (fun (d:Date, v) -> printfn "\t%s\t%.13F" (d.ToString("yyyy-MM-dd")) v) zeroCouponRates
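If you want to sanity-check the log-linear interpolation on its own, here is a minimal standalone sketch of the same formula used by `logarithmic` in the snippet. The function name `logLinear`, its argument order and the two pillar points are hypothetical, picked only for illustration; they are not taken from the curve data.

```fsharp
open System

// Log-linear interpolation: ln(df) is linear in time between the two pillars,
// which is the same as scaling lowDf by (highDf / lowDf) raised to the
// fraction of the interval that has elapsed.
let logLinear (lowDate: DateTime, lowDf: float) (highDate: DateTime, highDf: float) (sampleDate: DateTime) =
    let elapsed = float (sampleDate - lowDate).Days
    let span = float (highDate - lowDate).Days
    lowDf * ((highDf / lowDf) ** (elapsed / span))

// Hypothetical pillar points, ten days apart.
let low = (DateTime(2009, 6, 1), 0.99)
let high = (DateTime(2009, 6, 11), 0.98)

// Sample exactly half way between the pillars.
let atMidpoint = logLinear low high (DateTime(2009, 6, 6))

// At the midpoint the result should agree with the geometric mean of the two factors.
printfn "interpolated = %.10f, geometric mean = %.10f" atMidpoint (sqrt (0.99 * 0.98))
```

Sampling at either pillar date gives back that pillar's own factor, and the midpoint lands on the geometric mean rather than the arithmetic one, which is the point of interpolating in log space.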

IE9 “Pin to Taskbar” Feature - Am I Missing Something?

The new “Pin to Taskbar” feature in IE9 seems to be, on the surface, a neat idea. You can drag the “favicon” of a website down to the (Windows 7) taskbar, and then subsequently launch it like an application with a single click.

There’s only one problem. It’s completely useless. I’m sure I must be missing something head-slappingly simple.

The instance of IE that launches to host the “pinned” site doesn’t seem to load any plugins. The most important plugin (for me) is LastPass, which securely and automatically logs me in to sites using a locally cached password. So, usually, I’d access my Hotmail (for instance) via a bookmark, and I’d be logged in and ready to go, but via an IE9 icon pinned to the taskbar, I’m presented with the login page every time. It doesn’t even have my email address pre-populated (even though I have the “Remember Me” box checked), let alone the password.

So it’s actually several orders of magnitude slower to use a pinned icon, than to just do it the old way, by clicking the IE (or Chrome) icon and then a bookmark.